Following my talk last week at the Texas State Philosophy Symposium, details have now been finalized for another talk at Texas State: this time in the context of the Philosophy Department’s Dialogue Series, where I’ll be talking about post-cinema (i.e. post-photographic moving image media such as video and various digital formats) and what I’ve been arguing is an essentially post-phenomenological system of mediation (see, for example, my talk from the 2013 SCMS conference or these related musings). For anyone who happens to be in the area, the talk will take place on Monday, April 14, 2014 at 12:30 pm (in Derrick Hall 111). UPDATE: The time has been changed to 10:00 am.


Philosophy of Science De-Naturalized: Notes towards a Postnatural Philosophy of Media (full text)

As I recently announced, I was invited to give the keynote address at the 17th annual Texas State University Philosophy Symposium. Here, now, is the full text of my talk:

Philosophy of Science De-Naturalized: Notes towards a Postnatural Philosophy of Media

Shane Denson

The title of my talk contains several oddities (and perhaps not a few extravagances), so I’ll start by looking at these one by one. First (or last) of all, “philosophy of media” is likely to sound unusual in an American context, but it denotes an emerging field of inquiry in Europe, where a small handful of people have started referring to themselves as philosophers of media, and where there is even a limited amount of institutional recognition of such appellations. In Germany, for example, Lorenz Engell has held the chair of media philosophy at the Bauhaus University in Weimar since 2001. He lists as one of his research interests “film and television as philosophical apparatuses and agencies” – which, whatever that might mean, clearly signals something very different from anything that might conventionally be treated under the heading of “media studies” in the US. On this European model, media philosophy is related to the more familiar “philosophy of film,” but it typically broadens the scope of what might be thought of as media (following provocations from thinkers like Niklas Luhmann, who treated everything from film and television to money, acoustics, meaning, art, time, and space as media). More to the point, media philosophy aims to think more generally about media as a philosophical topic, and not as mere carriers for philosophical themes and representations – which means going beyond empirical determinations of media and beyond a focus on media “contents” in order to think about ontological and epistemological issues raised by media themselves. Often, these discussions draw on the philosophy of science and of technology, and in my own talk this strategy will serve as the bridge between the predominantly European idea of “media philosophy” and the context of Anglo-American philosophy.

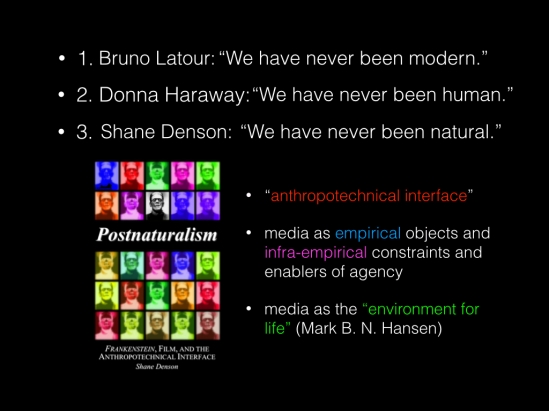

OK, but if the idea of a philosophy of media isn’t weird enough, I’ve added this weird epithet: “postnatural.” The meaning of this term is really the crux of my talk, but I’m only going to offer a few “notes towards” a postnatural theory, as it’s also the crux of a big, unwieldy book that I have coming out later this year, in which I devote some 400 pages to explaining and exploring the idea of postnaturalism. As a first approach, though, I can describe the general trajectory through a series of three heuristic (if oversimplifying) slogans.

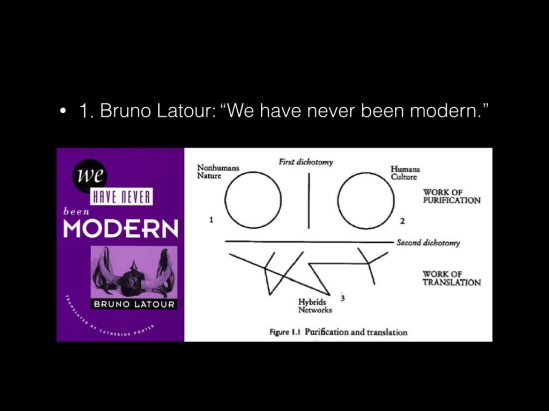

First, in response to debates over the alleged postmodernity of (Western) societies at the end of the twentieth century, French sociologist and science studies pioneer Bruno Latour, most famous for his association with so-called actor-network theory, claimed in his 1991 book of the same title that “We have never been modern.” What he meant, centrally, was that the division of nature and culture, nonhuman and human, that had structured the idea of modernity (and of scientific progress) was not only visibly crumbling in contemporary phenomena such as global warming and biotechnology – humanly created phenomena that become forces of nature in their own right – but had in fact been an illusion all along. We have never been modern, accordingly, because modern scientific instruments such as the air pump were simultaneously natural, social, and discursive phenomena. The idea of modernity, according to Latour, depends upon acts of purification that reinforce the nature/culture divide, but an array of hybrids constantly mix these realms. In terms of a philosophy of media, one of the most important conceptual contributions made by Latour in this context is the distinction between “intermediaries” and “mediators.” The former are seen as neutral carriers of information and intentionalities: instruments that expand the cognitive and practical reach of humans in the natural world while leaving the essence of the human untouched. Mediators, on the other hand, are seen to decenter subjectivities and to unsettle the human/nonhuman divide itself as they participate in an uncertain negotiation of these boundaries.

The NRA, with their slogan “guns don’t kill people, people kill people,” would have us believe that handguns are mere intermediaries, neutral tools for good or evil; Latour, on the other hand, argues that the handgun, as a non-neutral mediator, transforms the very agency of the human who wields it. That person takes up a very different sort of comportment towards the world, and the transformation is at once social, discursive, phenomenological, and material in nature.

With Donna Haraway, we could say that the human + handgun configuration describes something on the order of a cyborg, neither purely human nor nonhuman. And Haraway, building on Latour’s “we have never been modern,” ups the ante and provides us with the second slogan: “We have never been human.” In other words, it’s not just in the age of prosthetics, implants, biotech, and “smart” computational devices that the integrity of the human breaks down, but already at the proverbial dawn of humankind – for the human has co-evolved with other organisms (like the dog, who domesticated the human just as much as the other way around). From an ecological as much as an ideological perspective, the human fails to describe anything like a stable, well-defined, or self-sufficient category.

Now the third slogan, which is my own, doesn’t so much try to outdo Latour and Haraway as try to refocus some of the themes that are inherent in these discussions. Postnaturalism, in a nutshell, is the idea not that we are now living beyond nature, whatever that might mean, but that “we have never been natural” (and neither has nature, for that matter). Human and nonhuman, natural and unnatural agencies are products of mediations and symbioses from the very start, I contend. In order to argue for these claims, I take a broadly ecological view and focus not on discrete individuals but on what I call the anthropotechnical interface (the phenomenal and sub-phenomenal realm of mediation between human and technical agencies, where each impinges upon and defines the other in a broad space or ecology of material interaction). This view, which I develop at length in my book, allows us to see media not only as empirical objects but also as infra-empirical constraints and enablers of agency, such that media may be described, following Mark Hansen, as the “environment for life” itself. Accordingly, media-technical innovation translates into ecological change, transforming the parameters of life in a way that outstrips our ability to think about or capture such change cognitively – for at stake in such change is the very infrastructural basis of cognition and subjective being. So postnaturalism, as a philosophy of media and mediation, tries to think about the conditions of anthropotechnical evolution, conceived as the process that links transformations in the realm of concrete, apparatic media (such as film and TV) with more global transformations at a quasi-transcendental level. Operating on both empirical and infra-empirical levels, media might be seen, on this view, as something like articulators of the phenomenal-noumenal interface itself.

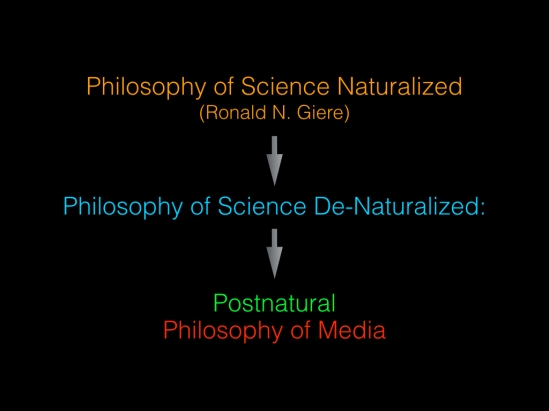

So the more I unpack this thing, the weirder it gets, right? Well, let me approach it from a different angle. Here’s where the first part of my title comes into play: “Philosophy of Science De-Naturalized.” Now, I mentioned before that postnaturalism does not postulate that we are living “after” nature; what I want to emphasize now is that it also remains largely continuous with naturalism, conceived broadly as the idea that the cosmos is governed by material principles which are the object, in turn, of natural science. And, more to the point, the first step in the derivation of a properly postnatural theory, which never breaks with the idea of a materially evolving nature, is to work through a naturalized epistemology, in the sense famously articulated by Willard V. O. Quine, but to locate within it the problematic role of technological mediation. By proceeding in this manner, I want to avoid the impression that a postnatural theory is based on a merely discursive “deconstruction” of nature as a concept. Against the general thrust of broadly postmodernist philosophies, which might show that our ideas of nature and its opposites are incoherent, mine is meant to be a thoroughly materialist account of mediation as a transformative force. So the “Philosophy of Science De-Naturalized,” as I put it here, marks a particular trajectory that takes off from what Ronald Giere has called “Philosophy of Science Naturalized” and works its way towards a properly postnatural philosophy of media.

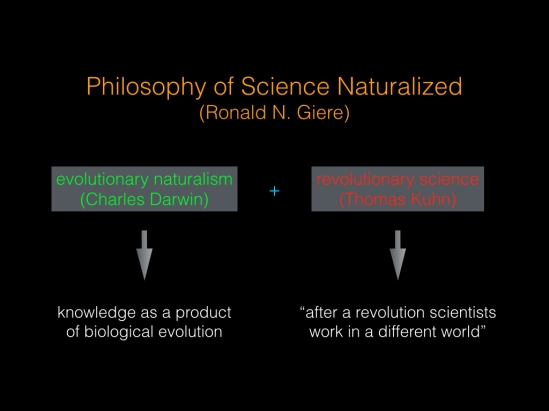

Giere’s naturalized philosophy of science is of interest to me because it aims to coordinate evolutionary naturalism (in the sense of Darwin) with revolutionary science (in the sense of Thomas Kuhn). In other words, it aims to reconcile the materialism of naturalized epistemology with the possibility of radical transformation, which Kuhn sees taking place with scientific paradigm shifts, and which I want to attribute to media-technical changes. Taking empirical science as its model, and taking it seriously as an engagement with a mind-independent reality, an “evolutionary epistemology” posits a strong, causal link between the material world and our beliefs about it, seeing knowledge as the product of our biological evolution. Knowledge (and, at the limit, science) is accordingly both instrumental or praxis-oriented and firmly anchored in “the real world.” As a means of survival, it is inherently instrumental, but in order for this instrumentality to be effective – and/or as the simplest explanation of such effectivity – the majority of our beliefs must actually correspond to the reality of which they form part. But, according to Kuhn’s view of paradigm shifts, “after a revolution scientists work in a different world” (Structure of Scientific Revolutions 135). This implies a strong incommensurability thesis that, according to critics like Donald Davidson, falls into the trap of idealism, along with its attendant consequences; i.e. if paradigms structure our experience, revolution implies radical relativism or else skepticism. So how can revolutionary transformation be squared with the evolutionary perspective?

Convinced that it contains important cues for a theory of media qua anthropotechnical interfacing, I would like to look at Giere’s answer in some detail. Asserting that “[h]uman perceptual and other cognitive capacities have evolved along with human bodies” (384), Giere’s is a starkly biology-based naturalism. Evolutionary theory posits mind-independent matter as the source of a matter-dependent mind, and unless epistemologists follow suit, according to Giere, they remain open to global arguments from theory underdetermination and phenomenal equivalence: since the world would appear the same to us whether it were really made of matter or of mind-stuff, how do we know that idealism is not correct? And because idealism contradicts the materialist bias of physical science, how do we know that scientific knowledge is sound? According to Giere, we can confidently ignore these questions once the philosophy of science has itself opted for a scientific worldview. Of course, the skeptic will counter that naturalism’s methodologically self-reflexive relation to empirical science renders its argumentation circular at root, but Giere turns the tables on skeptical challenges, arguing that they are “equally question-begging” (385). Given the compelling explanatory power and track record of modern science and evolutionary biology in particular, it is merely a feigned doubt that would question the thesis that “our capacities for operating in the world are highly adapted to that world” (385); knowledge of the world is necessary for the survival of complex biological organisms such as we are. But because this is essentially a transcendental argument, it does not break the circle in which the skeptic sees the naturalist moving; instead, it asserts that circularity is an inescapable consequence of our place in nature. In large part, this is because “we possess built-in mechanisms for quite direct interaction with aspects of our environment. The operations of these mechanisms largely bypass our conscious experience and linguistic or conceptual abilities” (385).

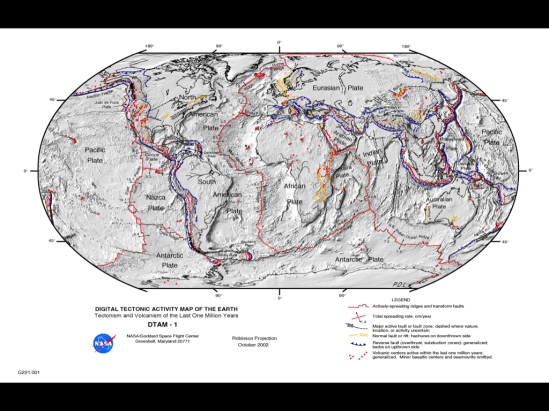

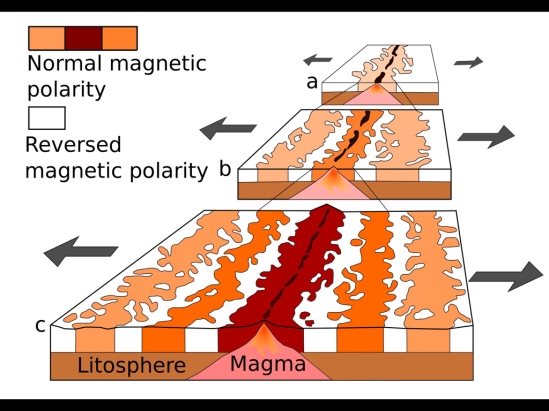

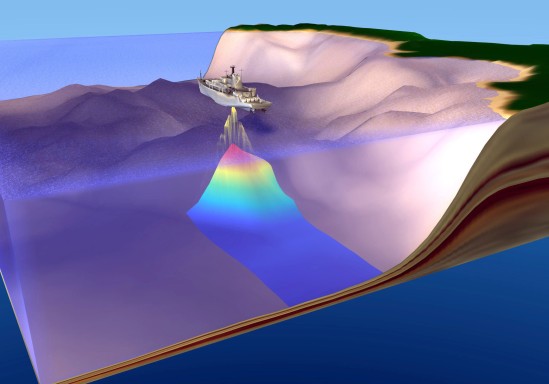

So much for the evolutionary perspective, but where does revolutionary science fit into the picture? To answer this question, Giere turns to the case of the geophysical revolution of the 1960s, when a long-established model of the earth as a once much warmer body that had cooled and contracted, leaving the oceans and continents more or less fixed in their present positions, was rapidly overturned by the continental drift model that set the stage for the now prevalent plate tectonics theory (391-94). The matching coastlines of Africa and South America had long suggested the possibility of movement, and drift models had been developed in the early twentieth century but were left, by and large, unpursued; it was not just academic protectionism that preserved the old model but a lack of hard evidence capable of challenging accepted wisdom – accepted because it “worked” well enough to explain a large range of phenomena.

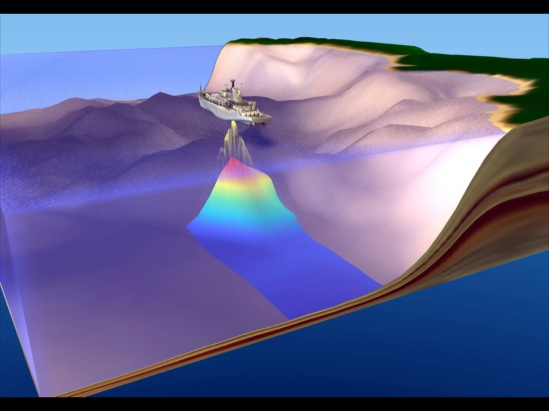

The discovery in the 1950s of north-south ocean ridges suggested, however, a plausible mechanism for continental drift: if the ridges were formed, as Harry Hess suggested, by volcanism, then “sea floor spreading” should be the result, and the continents would be gradually pushed apart by its action. The discovery, also in the 1950s, of large-scale magnetic field reversals provided the model with empirically testable consequences (the Vine-Matthews-Morley hypothesis): if the field reversals were indeed global and if the sea floor was spreading, then irregularly patterned stripes running parallel to the ridges should match the patterns observed in geological formations on land. Until this prediction was corroborated, there was still little impetus to overthrow the dominant theory, but magnetic soundings of the Pacific-Antarctic Ridge in 1966, along with sea-floor core samples, revealed the expected polarity patterns and led, within the space of a year, to a near-complete acceptance of drift hypotheses among earth scientists.

According to Giere, naturalism can avoid idealistic talk of researchers living “in different worlds” and explain the sudden revolution in geology by appealing only to a few very plausible assumptions about human psychology and social interaction – assumptions that are fully compatible with physicalism. These concern what he calls the “payoff matrix” for accepting one of the competing theories (393). Abandoning a pet theory is seldom satisfying, and the rejection of a widely held model is likely to upset many researchers, revealing their previous work as no longer relevant. Resistance to change is all too easily explained. However, humans also take satisfaction in being right, and scientists hope to be objectively right about those aspects of the world they investigate. This interest, as Giere points out, does not have to be considered “an intrinsic positive value” among scientists, for it is tempered by psychosocial considerations (393) such as the fear of being ostracized and the promise of rewards. The geo-theoretical options became clear – or emerged as vital rather than merely logical alternatives – with the articulation of a drift model with clearly testable consequences. We may surmise that researchers began weighing their options at this time, though it is not necessary to consider this a transparently conscious act of deliberation. What was essential was the wide agreement among researchers that the predictions regarding magnetic profiles, if verified, would be extremely difficult to square with a static earth model and compellingly simple to explain if drift really occurred. Because researchers shared this basic assumption, the choice was easy when the relevant data came in (394).

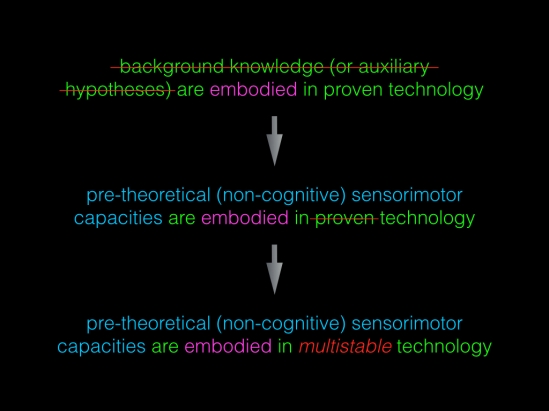

But the really interesting thing about this case, in my opinion, is the central role that technology played in structuring theoretical options and forcing a decision, which Giere notes only in passing. The developing model first became truly relevant through the availability of technologies capable of confirming its predictions: technologies for conducting magnetic soundings of the ocean floor and for retrieving core samples from the deep. Indeed, the Vine-Matthews-Morley hypothesis depended on technology not only for its verification, but for its initial formulation as well: ocean ridges could not have been discovered without instruments capable of sounding the ocean floor, and the discovery of magnetic field reversals depended on a similarly advanced technological infrastructure. A reliance on mediating technologies is central to the practice of science, and Giere suggests that an appreciation of this fact helps distinguish naturalism from “methodological foundationism,” or the notion that justified beliefs must ultimately trace back to a firm basis in immediate experience (394). His account of the geological paradigm shift therefore “assumes agreement that the technology for measuring magnetic profiles is reliable. The Duhem-Quine problem [i.e. the problem that it is logically possible to salvage empirically disconfirmed theories by ad hoc augmentation] is set aside by the fact that one can build, or often purchase commercially, the relevant measuring technology. The background knowledge (or auxiliary hypotheses) are embodied in proven technology” (394). In other words, the actual practice of science (or technoscience) does not require ultimate justificational grounding, and the agreement on technological reliability ensures, according to Giere and contra Kuhn, that disagreeing parties still operate in the same world.

But while I agree that Giere’s description of the way technology is implemented by scientists is a plausible account of actual practice and its underlying assumptions, I question his extrapolation from the practical to the theoretical plane. With regard to technology, I contend, the circle problem resurfaces with a vengeance. Giere is right, in my opinion, to reject the circle argument as the skeptic poses it, i.e. as invalidating naturalism’s methodologically self-reflexive application of scientific theories to the theory of science. Our evolutionary history, I agree, genuinely militates against the skeptic’s requirement that we be able to provide grounds for all our beliefs; our survival depends upon an embodied knowledge that is presupposed by, and therefore not wholly explicable to, our conscious selves. But as extensions of embodiment, the workings of our technologies are equally opaque to subjective experience, even – or especially – when they seem perfectly transparent channels of contact with the world. Indeed, Giere seems to recognize this when he says that “background knowledge (or auxiliary hypotheses) are *embodied* in proven technology” (394, emphasis added). In other words, scientists invest technology with a range of assumptions concerning “reliability” or, more generally, about the relations of a technological infrastructure to the natural world; their agreement on these assumptions is the enabling condition for technology to yield clear-cut decision-making consequences. Appearing neutral to all parties involved, the technology is in fact loaded, subordinated to human aims as a tool. Some such subordinating process seems, from a naturalistic perspective, unavoidable for embodied humans. However, agreement on technological utility – on both whether and how a technology is useful – is not guaranteed in every case. Moreover, it is not just a set of cognitive, theoretical assumptions (“auxiliary hypotheses”) with which scientists entrust technologies, but also aspects of their pre-theoretically embodied, sensorimotor competencies. Especially at this level, mediating technologies are open to what Don Ihde calls an experiential “multistability” – capable, that is, of instantiating to differently situated subjectivities radically divergent ways of relating to the world. But it is precisely the consensual stability of technologies that is the key to Giere’s contextualist rebuttal of “foundationism.”

Downplaying multistability is the condition for a general avoidance of the circle argument, for a pragmatic avoidance of idealism and/or skepticism. This, I believe, is most certainly the way things work in actual practice; (psycho)social-institutional pressures work to ensure consensus on technological utility. But does naturalism, self-reflexively endorsing science as the basis of its own theorization, then necessarily reproduce these pressures? Feminists in particular may protest on these grounds that the “nature” in naturalism in fact encodes the white male perspective historically privileged by science because embodied by the majority of practicing scientists. What I am suggesting is that the tacit, largely unquestioned processes by which technological multistability is tamed in practice form a locus for the inscription of social norms directly into the physical world; for in making technologies the material bearers of consensual values (whether political, epistemic, psychological, or even the animalistically basic preferability of pleasure over pain) scientific practice encourages certain modes of embodied relations to the world – not just psychic but material relations themselves embodied in technologies. It goes without saying that this can only occur at the expense of other modes of being-embodied.

More generally stated, the real problem with naturalism’s self-reflexivity is not that it fails to take skeptical challenges seriously or that it provides a false picture of actual scientific practice, but that in extrapolating from practice it locks certain assumptions about technological reliability into theory, embracing them as its own. While it is contextually – indeed physically – necessary that assumptions be made, and that they be embodied or exteriorized in technologies, the particular assumptions are contingent and non-neutral. This may be seen as a political problem, which it is, but it is also more than that. It is, moreover, an ontological problem of the instability of nature itself – not just of nature as a construct but of the material co-constitution of real, flesh-and-blood organisms and their environments. Once we enter the naturalist circle – and I believe we have good reason to do so – we accept that evolution dislodges the primacy of place traditionally accorded human beings. At the same time, we accept that the technologies with which science has demonstrated the non-essentiality of human/animal boundaries are reliable, that they show us what reality is really, objectively like. This step depends, however, on a bracketing of technological multistability. If we question this bracketing, as I do, we seem to lose our footing in material objectivity. Nevertheless convinced that it would be wrong to concede defeat to the skeptic, we point out that adaptive knowledge’s circularity or contextualist holism is a necessary requirement of human survival, that it follows directly from embodiment and the fact that the underlying biological mechanisms “largely bypass our conscious experience and linguistic or conceptual abilities” (Giere 385). But if we admit that technological multistability really obtains as a fact of our phenomenal relations to the world, this holism seems to lead us back precisely to Kuhn’s idealist suggestion that researchers (or humans generally) may occupy incommensurably “different worlds.” If we don’t want to abandon materialism, then we have to find an interpretation of this idea that is compatible with physicalism.

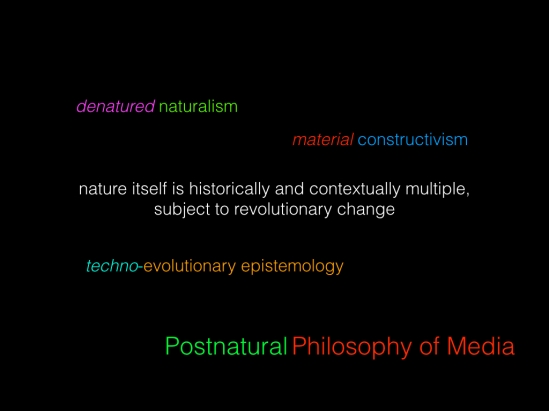

Indeed, it is the great merit of naturalism that it provides us with the means for doing so; however, it is the great failure of the theory that it neglects these resources. The failure, which consists in reproducing science’s subordination of technology to thought – in fact compounding the reduction, as contextually practiced, by subordinating it to an overarching (i.e. supra-contextual) theory of science – is truly necessary for naturalism, for to rectify its oversight of multistability is to admit the breakdown of a continuous nature itself. To consistently acknowledge the indeterminacy of human-technology-world relations and simultaneously maintain materialism requires, to begin with, that we extend Giere’s insight about biological mechanisms to specifically technological mechanisms of embodied relation to the world: they too “bypass our conscious experience and linguistic or conceptual abilities.” If we take the implications seriously, this means that technologies resist full conceptualization and are therefore potentially non-compliant with human (or scientific) aims; reliance on technology is not different in kind from reliance on our bodies: both ground our practice and knowledge in the material world, but neither is fully recuperable to thought. Extending naturalism in this way means recognizing that not only human/animal but also human/technology distinctions are porous and non-absolute. But whereas naturalism tacitly assumes that the investment of technology with cognitive aims is only “natural” and therefore beyond question, the multistability of non-cognitive investments of corporeal capacities implies that there is more to the idea of “different worlds” than naturalism is willing or able to admit: on a materialistic reading, it is nature itself, and not just human thought or science, that is historically and contextually multiple, non-coherently splintered, and subject to revolutionary change. Serious consideration of technology leads us, that is, to embrace a denatured naturalism, a techno-evolutionary epistemology, and a material rather than social constructivism. This, then, is the basis for a postnatural philosophy of media.

Philosophy of Science De-Naturalized: Notes towards a Postnatural Philosophy of Media

I am very honored to have been invited to deliver a keynote address at the Texas State University Philosophy Department’s annual philosophy symposium on April 4, 2014. Having studied as an undergraduate at Texas State (which back then was known as Southwest Texas State University, or SWT for short), this will be something of a homecoming for me, and I’m very excited about it!

In fact, one of the first talks I ever delivered was at the 1997 philosophy symposium – the very first year it was held. My talk back then, titled “Skepticism and the Cultural Critical Project,” sought to bridge the divide between, on the one hand, the analytical epistemology and philosophy of science that I was studying under the supervision of Prof. Peter Hutcheson and, on the other hand, the Continental-inspired literary and cultural theory to which I was being exposed by a young assistant professor of English, Mark B. N. Hansen (before he went off to Princeton, then the University of Chicago, and now Duke University).

In a way, my effort back then to mediate between these two very different traditions has proved emblematic for my further academic career. For example, my dissertation looked at Frankenstein films as an index for ongoing changes in the human-technological relations that, I contend, continually shape and re-fashion us at a deeply material, pre-subjective, and extra-discursive level of our being. The cultural realm of monster movies was therefore linked to the metaphysical realm of what I call the anthropotechnical interface, and my argument was mounted by way of a lengthy “techno-scientific interlude” in which I revisited many of the topics in Anglo-American epistemology and philosophy of science that I had first thought about as an undergrad in Texas.

Thus, without my knowing it (and it’s really only now becoming clear to me), my talk back in 1997 marked out a trajectory that it seems I’ve been following ever since. And now it feels like a lot of things are coming full circle: A book based upon my dissertation, for which Mark Hansen served as reader, is set to appear later this year (but more on that and a proper announcement later…). In addition, as I announced here recently, I will be moving to North Carolina this summer to commence a two-year postdoctoral fellowship at Duke, where I will be working closely with Hansen. Now, before that project gets underway, I have the honor to return to the philosophy symposium in San Marcos, Texas, and, in a sense, to revisit the place where it all started.

I thought it would be appropriate, therefore, to deliver a talk that continues along the trajectory I embarked upon there 17 years ago (wow, that makes me feel old…). My talk, titled “Philosophy of Science De-Naturalized: Notes towards a Postnatural Philosophy of Media,” takes a cue from Ronald N. Giere’s “Philosophy of Science Naturalized” – which sought to reconcile Thomas Kuhn’s idea of revolutionary paradigm shifts in the history of science with W. V. O. Quine’s notion of “Epistemology Naturalized,” i.e. a theory of knowledge based more on the material practice and findings of natural science (especially evolutionary biology) than on the “rational reconstruction” of ideal grounds for justified true belief. As I will show, my own “postnaturalism” – which is ultimately a philosophy of media rather than of knowledge or science – represents not so much a break with such naturalism as a particular manner of thinking through issues of technological mediation that emerge in that context, issues that I then subject to phenomenological scrutiny and ultimately to post-phenomenological transformations in order to arrive at a theory of anthropotechnical interfacing and change.

CFP: Digital Seriality — Special Issue of Eludamos: Journal for Computer Game Culture

I am pleased to announce that my colleague Andreas Jahn-Sudmann and I will be co-editing a special issue of Eludamos: Journal for Computer Game Culture on the topic of “Digital Seriality.” Here, you’ll find the call for papers (alternatively, you can download a PDF version here). Please circulate widely!

Call for Papers: Digital Seriality

Special Issue of Eludamos: Journal for Computer Game Culture (2014)

Edited by Shane Denson & Andreas Jahn-Sudmann

According to German media theorist Jens Schröter, the analog/digital divide is the “key media-historical and media-theoretical distinction of the second half of the twentieth century” (Schröter 2004:9, our translation). And while this assessment is widely accepted as a relatively uncontroversial account of the most significant media transformation in recent history, the task of evaluating the distinction’s inherent epistemological problems is all the more fraught with difficulty (see Hagen 2002, Pias 2003, Schröter 2004). Be that as it may, since the 1990s at the latest, virtually any attempt to address the cultural and material specificity of contemporary media culture has inevitably entailed some sort of (implicit or explicit) evaluation of this key distinction’s historical significance, thus giving rise to characterizations of the analog/digital divide as caesura, upheaval, or even revolution (Glaubitz et al. 2011). Seen through the lens of such theoretical histories, the technical and especially visual media that shaped the nineteenth and twentieth centuries (photography, film, television) typically appear today as the objects of contemporary digitization processes, i.e. as visible manifestations (or remnants) of a historical transition from an analog (or industrial) to a digital era (Freyermuth and Gotto 2013). Conversely, despite its analog pre-history, today’s digital computer has primarily been addressed as the medium of such digitization processes – or, in another famous account, as the end point of media history itself (Kittler 1986).

The case of digital games (as a software medium) is similar to that of the computer as a hardware medium: although the differences and similarities between digital games and older media were widely discussed in the context of the so-called narratology-versus-ludology debate (Eskelinen 2001; Juul 2001; Murray 1997, 2004; Ryan 2006), only marginal attention was paid in these debates to the media-historical significance of the analog/digital distinction itself. Moreover, many game scholars have tended to ontologize the computer game to a certain extent and to treat it as a central form or expression of digital culture, rather than tracing its complex historical emergence and its role in brokering the transition from analog to digital (significant exceptions like Pias 2002 notwithstanding). Other media-historiographical approaches, like Bolter and Grusin’s concept of remediation (1999), allow us to situate the digital game within a more capacious history of popular-technical media, but such accounts relate primarily to the representational rather than the operative level of the game, so that the digital game’s “ergodic” form (Aarseth 1999) remains largely unconsidered.

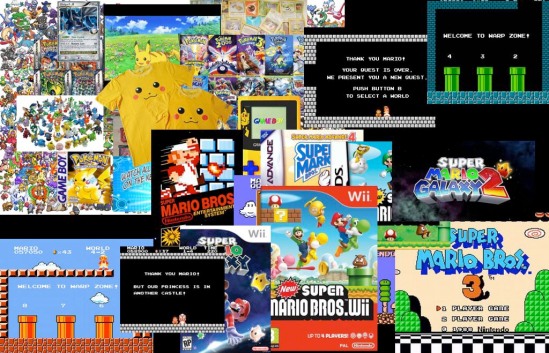

Against this background, we would like to suggest an alternative angle from which to situate and theorize the digital game as part of a larger media history (and a broader media ecology), an approach that attends to both the representational level of visible surfaces/interfaces and the operative level of code and algorithmic form: Our suggestion is to look at forms and processes of seriality/serialization as they manifest themselves in digital games and gaming cultures, and to focus on these phenomena as a means to understand both the continuities and the discontinuities that mark the transition from analog to digital media forms and our ludic engagements with them. Ultimately, we propose, the computer game occupies a place in a long history of popular seriality (which stretches from pre-digital serial literature, film, radio, and television, to contemporary transmedia franchises) while simultaneously instantiating novel forms of a specifically digital type of seriality (cf. Denson and Jahn-Sudmann 2013). By grappling with the formal commensurabilities and differences that characterize digital games’ relations to pre-digital (and non-ludic) forms of medial seriality, we therefore hope to contribute also to a more nuanced account of the historical process (rather than event) of the analog/digital divide’s emergence.

Overall, seriality is a central and multifaceted but largely neglected dimension of popular computer and video games. Seriality is a factor not only in explicitly marked game series (with their sequels, prequels, remakes, and other types of continuation), but also within games themselves (e.g. in their formal-structural constitution as an iterative series of levels, worlds, or missions). Serial forms of variation and repetition also appear in the transmedial relations between games and other media (e.g. expansive serializations of narrative worlds across the media of comics, film, television, and games, etc.). Additionally, we can grasp the relevance of games as a paradigm example of digital seriality when we think of the ways in which the technical conditions of the digital challenge the temporal procedures and developmental logics of the analog era, e.g. because series installments that once appeared successively are now increasingly available for immediate, repeated, and non-linear forms of consumption. And while this media logic of the database (cf. Manovich 2001: 218) can be seen to transform all serial media forms in our current age of digitization and media convergence, a careful study of the interplay between real-time interaction and serialization in digital games promises to shed light on the larger media-aesthetic questions of the transition to a digital media environment. Finally, digital games are not only symptoms and expressions of this transition, but also agents in the larger networks through which it has been navigated and negotiated; serial forms, which inherently track the processes of temporal and historical change as they unfold over time, have been central to this media-cultural undertaking (for similar perspectives on seriality in a variety of media, cf. Beil et al. 2013, Denson and Mayer 2012, Jahn-Sudmann and Kelleter 2012, Kelleter 2012, Mayer 2013).

To better understand the cultural forms and affective dimensions of what we have called digital games’ serial interfacings and the collective serializations of digital gaming cultures (cf. Denson and Jahn-Sudmann 2013), and in order to make sense of the historical and formal relations of seriality to the emergence and negotiation of the analog/digital divide, we seek contributions for a special issue of Eludamos: Journal for Computer Game Culture on all aspects of game-related seriality from a wide variety of perspectives, including media-philosophical, media-archeological, and cultural-theoretical approaches, among others. We are especially interested in papers that address the relations between seriality, temporality, and digitality in their formal and affective dimensions.

Possible topics include, but are not limited to:

- Seriality as a conceptual framework for studying digital games

- Methodologies and theoretical frameworks for studying digital seriality

- The (im)materiality of digital seriality

- Digital serialities beyond games

- The production culture of digital seriality

- Intra-ludic seriality: add-ons, levels, game engines, etc.

- Inter-ludic seriality: sequels, prequels, remakes

- Para-ludic seriality: serialities across media boundaries

- Digital games and the limits of seriality

******************************************************************************

Paper proposals (comprising a 350–500-word abstract, 3–5 bibliographic sources, and a 100-word bio) should be sent via e-mail by March 1, 2014 to the editors:

- a.sudmann[at]fu-berlin.de

- shane.denson[at]engsem.uni-hannover.de

Papers will be due July 15, 2014 and will appear in the fall 2014 issue of Eludamos.

*******************************************************************************

References:

Aarseth, Espen. 1999. “Aporia and Epiphany in Doom and The Speaking Clock: The Temporality of Ergodic Art.” In Marie-Laure Ryan, ed. Cyberspace Textuality: Computer Technology and Literary Theory. Bloomington: Indiana University Press, 31–41.

Beil, Benjamin, Lorenz Engell, Jens Schröter, Daniela Wentz, and Herbert Schwaab. 2012. “Die Serie. Einleitung in den Schwerpunkt.” Zeitschrift für Medienwissenschaft 2 (7): 10–16.

Bolter, J. David, and Richard A. Grusin. 1999. Remediation: Understanding New Media. Cambridge, Mass.: MIT Press.

Denson, Shane, and Andreas Jahn-Sudmann. 2013. “Digital Seriality: On the Serial Aesthetics and Practice of Digital Games.” Eludamos. Journal for Computer Game Culture 1 (7): 1–32. http://www.eludamos.org/index.php/eludamos/article/view/vol7no1-1/7-1-1-html.

Denson, Shane, and Ruth Mayer. 2012. “Grenzgänger: Serielle Figuren im Medienwechsel.” In Frank Kelleter, ed. Populäre Serialität: Narration – Evolution – Distinktion. Zum seriellen Erzählen seit dem 19. Jahrhundert. Bielefeld: Transcript, 185–203.

Eskelinen, Markku. 2001. “The Gaming Situation.” Game Studies 1 (1). http://www.gamestudies.org/0101/eskelinen/.

Freyermuth, Gundolf S., and Lisa Gotto, eds. 2012. Bildwerte: Visualität in der digitalen Medienkultur. Bielefeld: Transcript.

Glaubitz, Nicola, Henning Groscurth, Katja Hoffmann, Jörgen Schäfer, Jens Schröter, Gregor Schwering, and Jochen Venus. 2011. Eine Theorie der Medienumbrüche. Vol. 185/186. Massenmedien und Kommunikation. Siegen: Universitätsverlag Siegen.

Hagen, Wolfgang. 2002. “Es gibt kein ‘digitales Bild’: Eine medienepistemologische Anmerkung.” In Lorenz Engell, Bernhard Siegert, and Joseph Vogl, eds. Archiv für Mediengeschichte Vol. 2 – “Licht und Leitung.” München: Wilhelm Fink Verlag, 103–12.

Jahn-Sudmann, Andreas, and Frank Kelleter. 2012. “Die Dynamik serieller Überbietung: Zeitgenössische amerikanische Fernsehserien und das Konzept des Quality TV.” In Frank Kelleter, ed. Populäre Serialität: Narration – Evolution – Distinktion. Zum seriellen Erzählen seit dem 19. Jahrhundert. Bielefeld: Transcript, 205–24.

Juul, Jesper. 2001. “Games Telling Stories? – A Brief Note on Games and Narratives.” Game Studies 1 (1). http://www.gamestudies.org/0101/juul-gts/.

Kelleter, Frank, ed. 2012. Populäre Serialität: Narration – Evolution – Distinktion. Zum seriellen Erzählen seit dem 19. Jahrhundert. Bielefeld: Transcript.

Kittler, Friedrich A. 1986. Grammophon, Film, Typewriter. Berlin: Brinkmann & Bose.

Manovich, Lev. 2001. The Language of New Media. Cambridge, Mass.: MIT Press.

Mayer, Ruth. 2013. Serial Fu Manchu: The Chinese Supervillain and the Spread of Yellow Peril Ideology. Philadelphia: Temple University Press.

Murray, Janet H. 1997. Hamlet on the Holodeck: The Future of Narrative in Cyberspace. Cambridge, Mass.: MIT Press.

Murray, Janet H. 2004. “From Game-Story to Cyberdrama.” In Noah Wardrip-Fruin and Pat Harrigan, eds. First Person: New Media as Story, Performance, and Game. Cambridge, Mass.: MIT Press, 2–10.

Pias, Claus. 2002. Computer Spiel Welten. Zürich, Berlin: Diaphanes.

Pias, Claus. 2003. “Das digitale Bild gibt es nicht. Über das (Nicht-)Wissen der Bilder und die informatische Illusion.” Zeitenblicke 2 (1). http://www.zeitenblicke.de/2003/01/pias/.

Ryan, Marie-Laure. 2006. Avatars of Story. Minneapolis: University of Minnesota Press.

Schröter, Jens. 2004. “Analog/Digital – Opposition oder Kontinuum?” In Jens Schröter and Alexander Böhnke, eds. Analog/Digital – Opposition oder Kontinuum? Beiträge zur Theorie und Geschichte einer Unterscheidung. Bielefeld: Transcript, 7–30.

Metabolic Images

This Saturday, February 1, 2014, I’ll be taking another stab at the notion of “metabolic images,” which I’ve started developing in recent talks. My talk will take place at the University of Cologne in the context of a series of workshops titled (after Isabelle Stengers) “Ecologies of Practice: Media, Art, Literature,” organized by Reinhold Görling (Heinrich-Heine-Universität Düsseldorf), Marie-Luise Angerer (Kunsthochschule Medien Köln), and Hanjo Berressem (Universität zu Köln). Here is the abstract for the talk:

Metabolic Images

Shane Denson, Leibniz Universität Hannover

With the shift to a digital and more broadly post-cinematic media environment, moving images have undergone what I term their “discorrelation” from human embodied subjectivities and (phenomenological, narrative, and visual) perspectives. Clearly, we still look at – and we still perceive – images that in many ways resemble those of a properly cinematic age; yet many of these images are mediated in ways that subtly (or imperceptibly) undermine the distance of perspective, i.e. the spatial or quasi-spatial distance and relation between phenomenological subjects and the objects of their perception. At the center of these transformations are a set of strangely volatile mediators: post-cinema’s screens and cameras, above all, which serve not as mere “intermediaries” (in Latour’s terms) that would relay images neutrally between relatively fixed subjects and objects but which act instead as transformative, transductive “mediators” of the subject-object relation itself. In other words, digital and post-cinematic media technologies do not just produce a new type of image; they establish entirely new configurations and parameters of perception and agency, placing spectators in an unprecedented relation to images and the infrastructure of their mediation.

The transformation at stake here pertains to a level of being that is therefore logically prior to perception, as it concerns the establishment of a new material basis upon which images are produced and made available to perception. Accordingly, a phenomenological and post-phenomenological analysis of post-cinematic images and their mediating cameras points to a break with human perceptibility as such and to the rise of a fundamentally post-perceptual media regime. In an age of computational image production and networked distribution channels, media “contents” and our “perspectives” on them are rendered ancillary to algorithmic functions and become enmeshed in an expanded, indiscriminately articulated plenum of images that exceed capture in the form of photographic or perceptual “objects.” That is, post-cinematic images are thoroughly processual in nature, from their digital inception and delivery to their real-time processing in computational playback apparatuses; furthermore, and more importantly, this basic processuality explodes the image’s ontological status as a discrete packaged unit, and it insinuates itself – as I will argue – into our own microtemporal processing of perceptual information, thereby unsettling the relative fixity of the perceiving human subject. Post-cinema’s cameras thus mediate a radically nonhuman ontology of the image, where these images’ discorrelation from human perceptibility signals an expansion of the field of material affect: beyond the visual or even the perceptual, the images of post-cinematic media operate and impinge upon us at what might be called a “metabolic” level.

Mediatization and Serialization

The very first thing I posted on this blog (in May 2011) was a flyer announcing a talk I was giving on the connections between “mediatization” and “serialization.” While sorting through some papers, I came across the text of the talk again and realized I still haven’t gotten around to doing anything with it. In the end, I have to admit that the concept of mediatization, as defined by the media sociologists and communications theorists I discuss in the talk, doesn’t really appeal to me that much. For one thing, I am much less concerned than they are to guard against charges of technological determinism; mediatization theory often seems preoccupied with keeping the human, or the social, in control (on some general problems with this preoccupation, see McKenzie Wark’s recent essay “Against Social Determinism”). However, a large part of the theoretical appeal of the notion of mediatization (as a process of change closely linked with processes like modernization and globalization) lies in its description of an apparent loss of autonomy, a ceding of human agency to the technical. And I think there’s something to be said for this: the past two hundred-odd years have witnessed an explosion of technical actants, placing us in ever more complex and opaque feedback loops with them and the environment they mediate to us: welcome to the Anthropocene…

So this is not my problem with mediatization theory. Rather, my problem is with the insinuation that human agency was free of the taint of media or technics some two or three hundred (or however many) years ago, and that it only gradually became “mediatized.” My own notion of postnaturalism, summed up in the Latourian paraphrase that “we have never been natural,” is based on the idea of an essential and indissoluble (though by no means static) “anthropotechnical interface” that connects human and technical agencies in a transductive relation — there simply is no human agency without technical agencies, and vice versa. Still, it is necessary to take note of the empirical changes that take place against this cosmological horizon, and perhaps the notion of mediatization can be of service in this regard after all. If we set aside the worries over determinism, that is, and train our focus at a medium level of abstraction — somewhere between the cosmological and the phenomenologically/technically concrete and individual: at the level of supra-personal but not quite geological temporality or history — perhaps then an engagement with the concept of “mediatization” can help us think through the qualitative changes in agency that have taken place with the advent of the steam press, photography, film, radio, television, and digital media.

My talk on “Mediatization & Serialization” certainly does no more than scratch the surface in this regard, but in the hopes that it at least manages to do that, I have decided to reproduce the text here. As always, I am grateful for any comments!

Mediatization & Serialization

Shane Denson

(Note: This is a rough script of the talk I gave at Leibniz Universität Hannover on May 18, 2011. Bibliographical info is missing, and footnotes are just placeholders.)

[Intro]

What I hope to do is to bring the concept of mediatization, which I’ll explain shortly, into dialogue with that of serialization, especially as it pertains to the seriality of modern popular entertainment (as exhibited in film and television series, for example). Most generally, the basis for this dialogue derives from the fact that both mediatization and serialization are, or so I contend, characteristically modern processes, perhaps even central to modernity itself. Mediatization, according to the term’s usage in recent communications theory and media sociology, is related to the fact that the modern world is witness to a consistent increase in the sheer number of technical media. These, in turn, are seen to be ever more central to the shape and structure of sociocultural reality, so that it becomes increasingly untenable to think of media as a separate institution, exerting pressure on the others from outside. Instead, the situation shifts to one in which we find genuinely “mediatized” institutions, institutions transformed by media as an increasingly integral framing structure. So it no longer makes sense to speak of “the media and education” or “the media and the family,” as in traditional mass communications studies, but instead of thoroughly mediatized education, family, and so forth. Mediatization, according to Friedrich Krotz, is a “meta-process,” not itself empirically observable but, like the meta-processes of individualization, commercialization, and globalization, marking a real trajectory in the modern world and a force that qualitatively conditions the realm of empirically observable phenomena. In some respects, this view of mediatization as a meta-process might be compared to Friedrich Kittler’s idea of “media a priori,” but (against this association) it is constantly emphasized in these discussions that mediatization is to be seen as a non-deterministic process.

As for serialization, this refers, as I said, to the popular seriality that explodes onto the scene in the nineteenth century with the advent of new media technologies (like the steam press, which enabled the rapid production of printed periodicals, including story papers, penny dreadfuls, and dime novels). Since then, serialized narrative forms have continued to proliferate alongside and in the media that would seem to have changed our worlds into properly mediatized worlds: in film, radio, television, and now digital media. The serialization of entertainment cannot, it would seem, be thought in isolation from the processes described by the concept of mediatization, and serialization may be thought of as a special case of the mediatization of leisure time, specifically as it relates to media-inflected transformations of popular narrative. On the other hand, though, serialization is a truly special case of mediatization, in that it is a highly self-reflexive process: serial narratives observe changes in mediation, track them over time, and thus offer images of mediatization processes as they unfold, in “real time,” so to speak. Careful attention to serialization therefore promises to shed light on mediatization, and vice versa. What I call “techno-phenomenology,” to which I will return shortly, will help uncover the bidirectional communication between the two processes.

[Framing mediatization through serialization]

But first, to start making these connections concrete, I turn to some ideas and observations put forward by Frank Kelleter in a recent issue of Psychologie Heute. Kelleter notes that the evolution of TV sitcoms from the 1950s to today displays a continuous movement from the intact family as the site and occasion for humor, by way of the dysfunctional units of All in the Family or Married…with Children, to more recent sitcoms that revolve around a group of unrelated friends (as in Seinfeld, Friends, or Sex and the City). As Kelleter remarks, this development can be seen as a result of, and a reflection of, the increasing individualization of modern Western societies. Individualization, as you will recall, is one of the central meta-processes that, according to Friedrich Krotz, define modernity, along with the interrelated meta-processes of commercialization, globalization, and mediatization. And surely we can see these other processes reflected just as clearly in the globally syndicated, made-for-profit series that dominate TV screens today. But, as Kelleter recognizes, the reflection of social reality is only the beginning, and it would be shortsighted to reduce the link between serialization and mediatization to a passive commentary function that series can assume with respect to social developments.

On the contrary, serialized television actively frames our experience of the world: the week is punctuated at regular intervals by our favorite series, and our relations at home and on the job are perceived—though not uncritically—through the lenses of television drama, sitcoms, soaps, news, and police procedurals. Just as we judge series as either realistic or unrealistic, we inevitably compare reality and the people we encounter in the “real world” with the models we have met and occasionally become intimate with in the serialized world of television. In this respect, we can speak of a relatively direct (though hardly simple[1]) link between serialization and mediatization, as the serialized entertainment of TV transformatively reframes our interpersonal relations and behavioral expectations. Moreover, this activity of framing and reframing is itself increasingly the object of serial entertainment. The serial production and distribution of still incomplete narrative constructs opens a space for what Henry Jenkins calls “participatory culture,” and new social relations are created in the feedback loops between producers and consumers—social relations that are not only transformed but in fact generated through mediatization. And the media processes upon which these novel relations depend are increasingly the topic not only of fans’ discourses with one another but also of the serial productions themselves, which in the era of so-called Quality TV are aware of the existence of a well-informed, highly networked, and hardly passive fan base. Fans know how series are structured, and series’ producers know that fans know how they’re structured, and so the series themselves become increasingly complex and self-reflexive in response. 
The result is that the serialized communication that takes place in the inherently mediatized networks of producers and consumers of serial forms continues to proliferate in the manner of a self-serialization that takes as its object the serial maintenance of mediatized relations and communications in and through series. (And that only approximates the complex self-reflexive processes set in motion by a series like Lost.)

But while I’ve concentrated on recent television here, the link between serialization and mediatization is much broader. A moment ago, I invoked the figure of the frame to describe the work of regularly consumed TV series in organizing and transforming our experience, and I want to extend this to serial forms in other media as well. The figure of the frame has cropped up before in discussions of mediatization, often in connection with the idea of an overarching “media logic” (as the “orientation frame” or “processual framework” that increasingly structures social action).[2] But whereas this notion of media logic raises worries of determinism and is seen by some to cast mediatization as an implausibly linear process,[3] the sort of framing I have in mind is inherently reversible, volatile, and polyvocal—expressive of the non-neutral Prägkraft or “moulding forces” that communications scholar Andreas Hepp sees at work in media, while also compatible with his call (following David Morley) for a non-media-centric media theory capable of countenancing a central paradox of mediatization: capable, namely, of accounting for the increasingly central role of media in shaping our experience without thereby attributing the central or determinative causal agency of this development to media as a unified force. Divorced from the idea of a singular, teleological media logic, the figure of the frame illustrates what I call a process of “de/centering,” which Derrida exposes in a text ostensibly about painting. As he shows, frames are never simple or singular but highly unstable and phenomenally reversible entities. On the one hand, they stand outside the work, providing a background against which the framed content can emerge as a figure. On the other hand, though, the frame becomes part of the figure when seen against the background of the wall. 
Oscillating between ground and figure, the frame as margin or passepartout both centers and decenters the work: it enacts the multistable logic of de/centering that I propose explains the non-passive but non-deterministic framing function of media as revealed by a techno-phenomenological take on mediatization and serialization.

[Techno-phenomenology]

Techno-phenomenology is my term for an approach developed by American philosopher Don Ihde, following leads from the early Heidegger and Merleau-Ponty, among others. Generally speaking, techno-phenomenology looks at technologies neither as passive artifacts nor as determinative global systems, but instead as constituent parts of the relations that human agents maintain with their environments in concretely embodied, practical situations. What Ihde calls “mediating technologies” may occupy a variety of positions within the intentional relations of subjects to objects. For example, when one looks through a telescope, the device itself ideally disappears; it is, so to speak, “absorbed” into the perceiving subject’s sensorial apparatus to reveal far-away objects that couldn’t be seen with the naked eye. Prosthetically extending the embodied subject, the telescope occupies what Ihde calls an “embodiment relation.” In contrast to this, a radio telescope cannot be looked through in this direct manner; instead, its output must be looked at and interpreted, “read” as a sign of an objective reality that cannot be perceived directly. This type of technology instantiates a “hermeneutic relation”—occupying a semi-objective position as something to be looked at rather than through. But it is important to note that technologies, on this view, do not inevitably and irrevocably instantiate one or the other type of relation. As Heidegger’s famous hammer illustrates, an embodiment relation can always break down. And Don Ihde goes further to illustrate that breakage is not the only source of such reversal. Decontextualization, simple inexperience, or intentional aesthetic estrangement, for example, can all cause transformation, because techno-phenomenal relations are embedded in contexts of practice, which are in turn conditioned by social and cultural forces. 
The fact remains, though, that a “telic inclination,” as Ihde puts it, may inhere in a technology, predisposing it to certain modes of use and relation and not to others. Material factors exert pressures on praxis that push optical and radio telescopes, for example, to opposite ends of the spectrum of subject-object relations, while still allowing for non-typical forms of use or appropriation.

What goes for Ihde’s “mediating technologies” applies to media in a narrower sense as well. On a very general level, for example, we might say that textual media tend, for obvious reasons, towards hermeneutic relations, while the television screen tends to be looked through, rather than at, as a quasi-transparent window on the world. These are telic inclinations of the media (differential media logics in the plural, if you like), quite comparable to the non-absolute “tendencies” or “pressures” that Hepp terms the “moulding forces” of media. These forces, looked at from a techno-phenomenological perspective, can be seen to frame the perceptual and actional agencies of media users, but never in a univocal, absolute, or determinative manner. Significantly, contextual reversals remain a live possibility, as is demonstrated by the paradigmatic difference between early and so-called classical film. In the early years of cinema (1895–1905), which Tom Gunning has characterized as a “cinema of attractions,” the cinema itself was the main attraction; people went to see projection apparatuses, not films. But the display of cinematic magic and trick effects for their own sake came to be subordinated to narration, and the classical Hollywood style, which took shape by around 1917, worked to ensure the invisibility of narrative construction, rendering the apparatus of film a transparent window onto fictional worlds. Importantly, though, narrative serialization, in the form of film serials such as The Perils of Pauline, The Hazards of Helen, or The Exploits of Elaine, arose in the 1910s as a means of navigating the uncertain transitional phase between the early and classical paradigms.
Staging neither the pure media spectacles of early attractions-style cinema nor the self-enclosed diegetic universes of classical film, these serialized story films vacillated between medial transparency and opacity, between narrative closure and an openness onto the non-diegetic conditions of their storytelling—both due to the incompleteness of the weekly episodes, which were segmented by cliffhangers and interrupted by the rhythms of the work week, and due to self-reflexive tendencies by which the serials ostentatiously demonstrated the medial means of an emerging form of narrative construction.[4]

[The nexus]

This brings me to the nexus that, as I see it, binds popular seriality and mediality. By mediality, I mean the fact and specific character or quality of a given process of mediation. Roger Hagedorn points to a special relation between seriality and mediality when he observes that serial narratives often “serve to promote the medium in which they appear” (5). Medial self-reflexivity is, then, in a sense a natural facet of the serial form’s role in helping “to develop the commercial exploitation of a specific medium” (5). The serialized novels of the nineteenth-century feuilleton helped sell newspapers, and color comic strips advertised newly developed four-color printing processes. Early radio and television series served to attract consumers to the new media, to induce them to purchase expensive devices, and then to hook them with ongoing stories and recurring entertainments. Popular seriality is thus closely linked with the development of modern media, or with a media modernity characterized by constant pressure towards media-technological innovation. Serialization is correlated, that is, with the quantitative and qualitative changes which lay the very groundwork for the meta-process of mediatization. Concerned with their own medial forms, as well as the transformation of the media landscape implied by the emergence of new, competing media, series probe, expose, demonstrate, and experiment with their own mediality and compare various media with one another; moreover, because series unfold over time, they are capable of tracking the medial processes and changes upon which the meta-process of mediatization depends or supervenes.

Especially at transitional moments of media change, a techno-phenomenological perspective on seriality reveals a privileged view of the processes that are basic to mediatization; conversely, the concept of mediatization illuminates the lower-level work of serialization in the broader perspective of modernity. For example, late-nineteenth-century dime novels can be approached as medially inconspicuous channels through which simple, formulaic stories of frontier heroes, urban detectives, and young inventors were told; but when we see these tales in relation to the innovations in print technologies that made their production possible, to the transcontinental rail systems upon which their distribution relied, and to the increasingly urbanized settings in which their readers lived and worked, then the serially implemented locomotives, telegraphs, and other communication and transportation technologies that fill the pages of these stories seem to belong less to their narrative worlds than to the extra-diegetic machinery of their mediation and consumption. In techno-phenomenological terms, we find here a radical ambivalence between the transparency of embodiment relations and the medial opacity of hermeneutic relations. The serial form, which oscillates formally between repetition and variation, stages lifeworld changes in this unstable or “de/centered” manner and discloses thereby the underlying mechanics of mediatization in the process of its occurrence.

And because the specific periodicity of serialized forms can vary widely, ranging from daily to weekly to longer-term intervals, the perspective that series offer on mediatization varies accordingly. In addition to linear or episodic series that unfold within a single medium over a short period of time, there are also plurimedial series that may be staged over the course of decades. A serially staged figure such as Frankenstein’s monster constitutes such a series: originating in a novel, appearing numerous times on theater stages, achieving iconic form on film, and continuing to proliferate in comics, on TV, and in video games, the monster tracks virtually the entire course of modern media history. And it thrives particularly at moments of media change, such as the sound-film transition that ironically gave birth to Karloff’s mute monster. Robbed of the articulate speech acquired by the monster of the novel, the monster of the movies briefly served to highlight the fact and the eerie quality of sound film’s novel mediality, exemplifying the reversible logic of the frame by foregrounding the medial infrastructure over the mediated narrative. This reversal of figure and ground operated on the basis of the monster’s serial staging, on the basis of a preexisting familiarity and recognizability rendered strange in the new medium. With the habituation of sound film, the strangeness wore off and the iconic monster came to serve as the baseline for an ongoing serialization process. Here, if we look carefully, we can see the procession of major and minor media transformations that have made our world a properly mediatized one.

Finally, we might recognize here a meta-serial development, a historical shift in the constitution of serial forms, which provides a sort of wide-angle view of mediatization, as if through the wrong end of a telescope. In the procession from linear storytelling in a single medium, to the serialized proliferation of narratives repeated and varied in a fragmented plurimedial frame (such as is embodied by Frankenstein’s monster), to the recent advent of transmedial storytelling in the wake of a digitally induced media convergence, we see a long-term transformation of serial forms that speaks to an experience of progressive deterritorialization. Serially recurrent characters became dislodged from their material ecospheres, i.e. from the media in which they were born, through the proliferation of competing medial forms that vie for our attention. Likewise, we have been uprooted or liberated (depending on your perspective) from our immediate surroundings and, through these very media, been put in touch with distant, spatially nonlocal communities. But a de/centered view of this development is neither linear nor certain in its assessment of the outcome. Transmedial storytelling continues the trend of displacement with respect to a singular or stable medial framework, but it reverses the diegetic fragmentation exemplified by Frankenstein’s monster and other serial icons of the twentieth century. Today, in series that span television, film, print, and digital media, we find new tendencies toward reterritorialization, staged, though, as a complex and hardly straightforward affair. There is a renewed interest in creating unified diegetic worlds, in spite of or precisely because of the multiplicity of medial frames somewhat euphemistically united in talk of convergence.

It is unclear what this says about us and our contemporary serialized experience of mediatization, but I think we can rule out the idea that today’s transmedial series simply “reflect” social reality. Instead, they frame our experience in a de/centered manner that both displays and enacts a central paradox of our mediatized worlds: media are increasingly central in structuring our experience, but there is a reversible margin from which these structures remain open to negotiation. As I have tried to show, this reversibility is essential to the process of serialization, thus ensuring the continued importance of popular seriality as a site of the non-deterministic production of a mediatized modernity.

[1] Indeed, complexification is central to Kelleter’s argument, which is also sensitive to the self-reflexive functions of serialization which I exploit in this paper. See also Jason Mittell on “narrative complexity.”

[2] The notion of the frame, popular of late in a variety of discourses and disciplines, also crops up regularly in discussions of mediatization, and I think a great deal hinges on our understanding and use of the concept. For example, in an essay that approaches mediatization in terms of the “institutionalization of media logic” (42), Andrea Schrott invokes the figure of the frame to explain this media logic, which she describes as an “orientation frame” (48) that is non-neutral with respect to content. This accords with Altheide and Snow’s own description of media logic, in their 1979 book of that title, as “a processual framework through which social action occurs” (15). Similarly, Norm Friesen and Theo Hug invoke the notion of “framing” to signal the “epistemological orientation” increasingly provided by media (80), or what they call “the role of the mediatic a priori” (79).

[3] Nick Couldry’s worry.

[4] Moreover, formal changes are coupled with social changes (gender, class), and these are highly relevant to a study of the mediatization of entertainment or of leisure time, as well as (and correlated with) a mediatization of identity or social identification. With respect to the specific example of transitional-era film serials (and serial-queen melodramas), see my “The Logic of the Line Segment: Continuity and Discontinuity in the Serial-Queen Melodrama” (forthcoming in Serialization in Popular Culture, edited by Robert Allen and Thijs van den Berg, New York: Routledge, 2014).

Demon Debt

I am pleased to announce that on Friday, January 17, 2014 (12:00 pm in room 609, Conti-Hochhaus), Prof. Julia Leyda from Sophia University in Tokyo will be giving a talk on “Demon Debt: Paranormal Activity as Recessionary Post-Cinematic Allegory.” The lecture will take place in the context of my seminar on 21st-century film, but attendance is open to all.

Julia Leyda has participated in the two roundtable discussions on “post-cinematic affect” that have appeared to date in the pages of La Furia Umana, and she served as respondent at SCMS 2013 on a panel that included papers by Steven Shaviro, Therese Grisham, and myself. Among other projects, she is currently collaborating with me on the preparation of an edited collection on “post-cinematic theory” that we hope to see published in 2014! (More details soon…)

Here is the abstract for her talk in January:

Demon Debt: Paranormal Activity as Recessionary Post-Cinematic Allegory

Julia Leyda

The Paranormal Activity film franchise serves as a case study in twenty-first-century neoliberal post-cinema. The demon in the Paranormal films comes to claim a debt resulting from a contract with an ancestor, who has in a sense “mortgaged” her future offspring in exchange for power and wealth; the demon here is an allegory of debt under capitalism, invisible, conveyed through digital media, and inescapable. Set entirely inside feminine spaces of the home — bedroom, kitchen, and nursery — the films construct a post-feminist narrative that reconfigures the gender politics of horror cinema. But the post-cinematic moment also demands analysis of form in addition to a thematic reading. Digital data constitutes the “film” itself in the form of video footage, like transnational finance capital and the intangible systems of consumer credit, and like the unseen and immaterial demon. The incursion of debtor capitalism and financialization into the home in these films has turned deadly. Finally, like the demonic home invasion, the financialization of private life drafts the immaterial labor of the audience into the branding of the film.

Nonhuman Perspectives and Discorrelated Images in Post-Cinema

As I mentioned recently, I will be speaking next week at the Post-Cinematic Perspectives conference taking place November 22-23, 2013 at the Free University Berlin. Below you’ll find the abstract for my talk:

Nonhuman Perspectives and Discorrelated Images in Post-Cinema

Shane Denson

With the shift to a digital and more generally post-cinematic media environment, moving images have undergone what I term their “discorrelation” from human embodied subjectivities and (phenomenological, narrative, and visual) perspectives. Clearly, we still look at – and we still perceive – images that in many ways resemble those of a properly cinematic age; yet many of these images are mediated in ways that subtly (or imperceptibly) undermine the distance of perspective, i.e. the quasi-spatial distance and relation between phenomenological subjects and the objects of their perception. At the center of these transformations are a set of strangely irrational mediators and “crazy” cameras – physical and virtual imaging apparatuses that seem not to know their place with respect to diegetic and nondiegetic realities, and that therefore fail to situate viewers in a coherently designated spectating-position. A phenomenological and post-phenomenological analysis of such mediating apparatuses points to the rise of a fundamentally post-perceptual media regime, in which “contents” and “perspectives” are ancillary to algorithmic functions and enmeshed in an expanded, indiscriminately articulated plenum of images that exceed capture in the form of photographic or perceptual “objects.” Post-cinema’s cameras thus mediate a nonhuman ontology of computational image production, processing, and circulation, where these images’ discorrelation from human perceptibility signals an expansion of the field of material affect: beyond the visual or even the perceptual, the images of post-cinematic media operate and impinge upon us at what might be called a “metabolic” level.

Post-Cinematic Affect: Post-Continuity, the Irrational Camera, Thoughts on 3D

Last summer (2012), I participated in a roundtable discussion with Therese Grisham and Julia Leyda on the subject of “Post-Cinematic Affect: Post-Continuity, the Irrational Camera, Thoughts on 3D.” Drawing on Steven Shaviro’s book Post-Cinematic Affect, and looking at films such as District 9, Melancholia, and Hugo, the roundtable appeared in the multilingual online journal La Furia Umana (issue 14, 2012). For some reason, the LFU site has been down for a few weeks, and I have no information about whether or when it will be back up. Accordingly, I wanted to point out for anyone who is interested that you can still find a copy of the roundtable discussion here (as a PDF on my academia page). Enjoy!

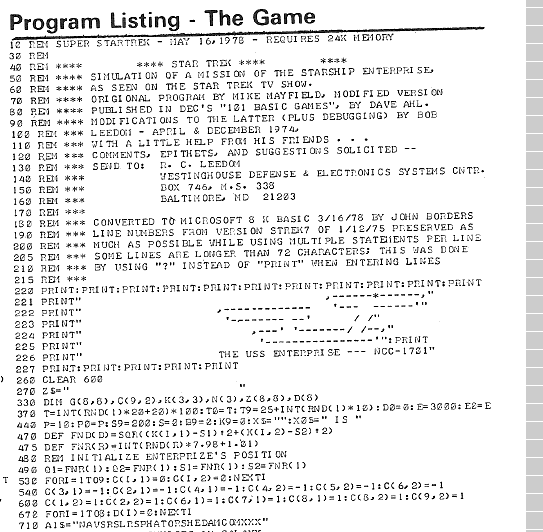

Super Star Trek and the Collective Serialization of the Digital

Here’s a sneak peek at something I’ve been working on for a jointly authored piece with Andreas Jahn-Sudmann (more details soon!):

[…] whereas the relatively recent example of bullet time emphasizes the incredible speed of our contemporary technical infrastructure, which threatens at every moment to outstrip our phenomenal capacities, earlier examples often mediated something of an inverse experience: a mismatch between the futurist fantasy and the much slower pace necessitated by the techno-material realities of the day.

The example of Super Star Trek (1978) illuminates this inverse sort of experience and casts a media-archaeological light on collective serialization, by way of the early history of gaming communities and their initially halting articulation into proto-transmedia worlds. Super Star Trek was not the first – and far from the last – computer game to be based on the Star Trek media franchise (which encompasses the canonical TV series and films, along with their spin-offs in comics, novels, board games, role-playing games, and the larger Trekkie subculture). Wikipedia lists over seventy-five Trek-themed commercial computer, console, and arcade games since 1971 (“History of Star Trek Games”) – and the list is almost surely incomplete. Nevertheless, Super Star Trek played a special role in the home computing revolution, as its source code’s inclusion in the 1978 edition of David Ahl’s BASIC Computer Games was instrumental in making that book the first million-selling computer book.[i] The game would continue to exert a strong influence: it would go on to be packaged with new IBM PCs as part of the included GW-BASIC distribution, and it inspired countless ports, clones, and spin-offs in the 1980s and beyond.