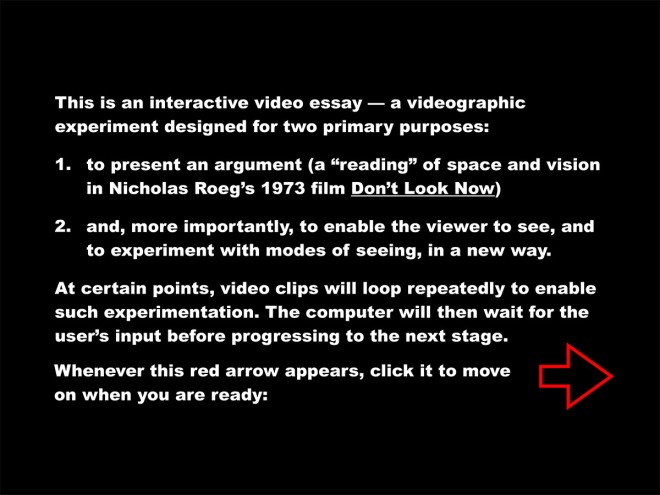

Back in 2016, my experimental video essay “Don’t Look Now: Paradoxes of Suture” was published in the open access journal [in]Transition: Journal of Videographic Film and Moving Image Studies. This was an experiment with the limits of the “video essay” form, and a test to see if it could accommodate non-linear and interactive forms (produced with some very basic JavaScript and HTML/CSS so as to remain accessible and viewable even with updates to web infrastructures). Seeing as the interactive video essay was accepted and published in a peer-reviewed journal devoted, for the most part, to more conventional linear video essays, I considered the test passed. (However, since the journal has recently moved to a new hosting platform with the Open Library of Humanities, the interactive version is no longer included directly on the site, which instead links to my own self-hosted version here.)

But even if the test was passed in terms of publication, the peer reviewers noted that the experiment was not altogether successful. Richard Misek noted that the piece was “flawed,” qualifying nevertheless that “the work’s limitations are integral to its innovation.” The innovation, according to Misek, was to point to a new way of looking and doing close analysis:

“Perhaps one should see it not as a self-contained video essay but as a walk-through of an early beta of an app for viewing and manipulating video clips spatially. Imagine, for example… The user imports a scene. The app then splits it into clips and linearly spatializes it, perhaps like in Denson’s video. Each clip can then be individually played, looped, or paused. For example, the user can scroll to, and then pause, the in points or out points for each clip; or just play two particular shots simultaneously and pause everything else. Exactly how the user utilizes this app depends on the film and what they hope to discover from it. The very process of doing this, of course, may then also reveal previously unnoticed themes, patterns, or equivalences. Such a platform for analyzing moving images could hugely facilitate close formal analysis. I imagine a moving image version of Warburg’s Mnemosyne Atlas – a wall (/ screen) full of images, all existing in spatial relation with each other, and all in motion; a field of connections waiting to be made.

“In short, I think this video points towards new methods of conducting close analysis rather than new methods of presenting it. In my view, the ideal final product would not be a tidied-up video essay but an app. I realize that, technically and conceptually, this is asking a lot. It would be a very different, and much larger project. For now, though, this video provides an inspiring demo of what such an app could help film analysts achieve.”

Fast-forward eight years, to a short article on “Five Video Essays to Close Out May,” published on May 28, 2024 in Hyperallergic. Here, author Dan Schindel includes a note about an open-source and open-access tool, the Interactive Video Grid by Quan Zhang, that is inspired by my video essay and aims to realize a large part of the vision laid out by Misek in his review. As one of two demos of the tool, which allows users to create interactive grids of video clips for close and synchronous analysis, Zhang even includes “Don’t Look Now: Paradoxes of Suture. A Reconfiguration of Shane Denson’s Interactive Video Essay.”

I’m excited to experiment with this in classrooms, or as an aid in my own research. And I can imagine that additional development might point to further innovations in modes of looking. For example, what if we make the grid dynamic, such that the clips can be dragged and rearranged? Or added and removed, resized, slowed down or sped up, maybe even superimposed on one another? Of course, many such transformations are already possible within nonlinear digital editing platforms, but there it is only the editing process that is nonlinear: the operations imagined here become visible only in the exported products, which are, alas, still linear videos.
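As a rough illustration of what such a dynamic grid would have to keep track of, the following TypeScript sketch models only the state of the grid: which clips are present, in what order, at what speed and size. It is not based on Zhang’s tool or on the code of the original video essay. The names `Clip`, `ClipGrid`, and the clip identifiers are hypothetical, and superimposition and actual video playback are left out.

```typescript
// Hypothetical state model for a dynamic video grid.
// No DOM or video APIs are involved; this only tracks the arrangement.
interface Clip {
  id: string;           // illustrative label, e.g. a shot name
  playbackRate: number; // 1 = normal speed, 0.5 = half speed, 2 = double speed
  scale: number;        // relative size of the clip within the grid
}

class ClipGrid {
  private clips: Clip[] = [];

  // Add a clip at the end of the grid with default speed and size.
  add(id: string): void {
    if (this.clips.some((c) => c.id === id)) {
      throw new Error(`Clip "${id}" is already in the grid`);
    }
    this.clips.push({ id, playbackRate: 1, scale: 1 });
  }

  // Remove a clip; returns true if something was removed.
  remove(id: string): boolean {
    const index = this.clips.findIndex((c) => c.id === id);
    if (index === -1) return false;
    this.clips.splice(index, 1);
    return true;
  }

  // Move a clip to a new position, as a drag-and-drop handler might do.
  move(id: string, toIndex: number): void {
    const from = this.clips.findIndex((c) => c.id === id);
    if (from === -1) throw new Error(`No clip "${id}" in the grid`);
    const [clip] = this.clips.splice(from, 1);
    const target = Math.max(0, Math.min(toIndex, this.clips.length));
    this.clips.splice(target, 0, clip);
  }

  // Slow down or speed up a single clip.
  setRate(id: string, rate: number): void {
    if (rate <= 0) throw new Error("Playback rate must be positive");
    this.find(id).playbackRate = rate;
  }

  // Resize a single clip relative to its default size.
  resize(id: string, scale: number): void {
    if (scale <= 0) throw new Error("Scale must be positive");
    this.find(id).scale = scale;
  }

  // Look up a clip without exposing the internal array.
  get(id: string): Clip | undefined {
    const clip = this.clips.find((c) => c.id === id);
    return clip ? { ...clip } : undefined;
  }

  // Current left-to-right (or reading-order) arrangement of clip ids.
  order(): string[] {
    return this.clips.map((c) => c.id);
  }

  private find(id: string): Clip {
    const clip = this.clips.find((c) => c.id === id);
    if (!clip) throw new Error(`No clip "${id}" in the grid`);
    return clip;
  }
}

// Usage: three shots, one dragged to the front, one slowed down, one removed.
const grid = new ClipGrid();
grid.add("shot-1");
grid.add("shot-2");
grid.add("shot-3");
grid.move("shot-3", 0);
grid.setRate("shot-2", 0.5);
grid.remove("shot-1");
console.log(grid.order()); // logs the ids in order: shot-3, shot-2
```

In a browser, a model like this would presumably be wired to `<video>` elements, with each clip's `playbackRate` copied to the corresponding element. The point of keeping the arrangement as live state is that it stays manipulable by the viewer instead of being flattened into a single rendered video.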

Like my original video, Zhang’s new tool might also be “flawed” and in need of further development, but it succeeds in pointing to new ways of looking that go beyond linear forms of film and video and that take fuller advantage of the underlying nonlinearity of digital media. Digital media, I would suggest, are in any case transforming our modes of visual attention, so it seems only right that we should experiment self-reflexively and probe the limits of these new ways of looking.